
The Technological Future of Digital Handheld Control

The handheld controller, the primary tool for navigating and dominating digital worlds, is entering a fascinating new phase of development. Moving beyond the established dual-stick, four-face-button layout, the next generation of these devices is poised to integrate sophisticated sensors and adaptive mechanics that promise to redefine the very nature of human-computer interaction in the context of play. The focus is shifting from simple command execution to a more organic, responsive, and adaptive partnership with the user.

One of the most significant technological advancements is the adoption of Hall Effect sensing for thumbsticks and triggers. Traditional potentiometers rely on a wiper sliding across a resistive track. That physical contact and friction gradually wear the track, which eventually produces unintended input, the widely discussed phenomenon known as ‘drift’. Hall Effect sensors, by contrast, determine position by measuring changes in a magnetic field as a magnet moves relative to the sensor. Because the core sensing components never touch, they do not wear down the way a resistive track does, so their readings stay consistent over a far longer service life (springs and other mechanical parts still age, however). This change addresses a major longevity and reliability concern, providing a foundational upgrade for competitive and dedicated players who need consistent performance over thousands of hours of use.
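Whatever the sensing technology, the controller's firmware ultimately turns a raw reading into an axis value. The sketch below shows one simple way to do that, assuming a linear sensor response, a 12-bit reading (0-4095), and a per-axis calibration of minimum, center, and maximum readings; `AxisCalibration` and `normalize_axis` are hypothetical names, not any manufacturer's firmware. The relevance to drift is that a calibration like this only stays valid while the resting reading stays put, which is exactly what a wear-free sensing element helps preserve.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AxisCalibration:
    """Hypothetical per-axis calibration, e.g. captured at the factory."""
    raw_min: int     # reading at full deflection in the negative direction
    raw_center: int  # reading with the stick at rest
    raw_max: int     # reading at full deflection in the positive direction


def normalize_axis(raw: int, cal: AxisCalibration) -> float:
    """Map a raw sensor reading to an axis value in [-1.0, 1.0].

    Assumes the sensor output varies linearly with stick position, so each
    half of the travel (below and above center) is scaled independently.
    """
    if raw >= cal.raw_center:
        value = (raw - cal.raw_center) / (cal.raw_max - cal.raw_center)
    else:
        value = (raw - cal.raw_center) / (cal.raw_center - cal.raw_min)
    # Clamp so readings slightly beyond the calibrated extremes stay in range.
    return max(-1.0, min(1.0, value))


# Example: a 12-bit reading from a stick calibrated around 2048.
cal = AxisCalibration(raw_min=200, raw_center=2048, raw_max=3900)
print(normalize_axis(2974, cal))  # half deflection: prints 0.5
```

Real Hall sensors are not perfectly linear, so production firmware may use a lookup table or curve fit instead of two straight segments.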

Furthermore, haptic feedback is set to become far more sophisticated. Current high-definition vibration technology already delivers localized, textured feedback, but the next step involves force feedback and adaptive resistance built into key moving parts, particularly the shoulder triggers. This technology lets the device dynamically alter the tension and resistance of the triggers to simulate real-world physical actions. For example, pulling the trigger of a shotgun might feel heavy and slow, while firing a light automatic weapon would offer a quick, light pull and a rapid mechanical click. In vehicle simulations, the triggers could communicate a grinding gear or slipping tires through measured, subtle resistance. This is more than mere vibration; it is active mechanical communication that simulates inertia, tension, and texture, fundamentally changing how digital physics are experienced.
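The shotgun and light-automatic examples can be thought of as two resistance curves over the trigger's travel. The following is a minimal sketch under that assumption: resistance ramps from a base level up to a "wall", then drops sharply at a break point, which the player would feel as the release. `TriggerProfile`, `resistance_at`, and the numeric values are hypothetical illustrations, not a real console API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TriggerProfile:
    """Hypothetical description of how stiff a trigger should feel along its travel."""
    base: float         # resistance at the start of travel, 0.0 (free) to 1.0 (max force)
    wall: float         # peak resistance just before the break point
    break_point: float  # fraction of travel (0.0-1.0) where the "shot" releases
    after: float        # resistance once past the break point


def resistance_at(travel: float, profile: TriggerProfile) -> float:
    """Return the resistance the trigger motor should apply at a given travel.

    Resistance rises linearly from `base` to `wall` until the break point,
    then drops to `after`, which the player feels as a release or click.
    """
    travel = max(0.0, min(1.0, travel))  # clamp to the physical range
    if travel < profile.break_point:
        t = travel / profile.break_point
        return profile.base + (profile.wall - profile.base) * t
    return profile.after


# Heavy, long pull with a hard release versus a short, light pull.
SHOTGUN = TriggerProfile(base=0.3, wall=0.9, break_point=0.7, after=0.1)
LIGHT_AUTO = TriggerProfile(base=0.1, wall=0.2, break_point=0.2, after=0.05)
```

Describing the feel as a handful of parameters, rather than streaming force values from the game, would let the controller evaluate the curve locally at a high update rate. That is one plausible division of labor, not a description of any shipping device.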

Another promising avenue of development is user biometrics and state awareness. Future devices may incorporate subtle sensors that monitor physiological data such as heart rate or electrodermal activity. Integrated responsibly and ethically, this information could let the entertainment system dynamically adjust aspects of the experience, such as difficulty, ambient sound design, or pacing, to either calm or intensify the player’s emotional state. Imagine an environment that becomes subtly more aggressive as your pulse rises, or a puzzle that offers a small hint when it detects heightened frustration. The result is a deeply personalized, physiologically responsive interaction loop.
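One way to picture such a loop is a small controller that smooths noisy heart-rate samples and nudges an intensity level by bounded steps. The sketch below assumes a known resting heart rate, an exponential moving average for smoothing, and a goal of holding the player near a target elevation above resting; `PacingAdjuster` and all of its parameters are hypothetical, and the intensity value stands in for whatever the game would actually adjust (difficulty, music, pacing).

```python
class PacingAdjuster:
    """Hypothetical loop that nudges game intensity toward a target arousal level.

    Heart-rate samples are smoothed with an exponential moving average (EMA) so
    a single noisy reading cannot cause a sudden change in the experience.
    """

    def __init__(self, resting_bpm: float, target_elevation: float = 0.2,
                 alpha: float = 0.2, max_step: float = 0.05) -> None:
        self.resting_bpm = resting_bpm            # player's baseline heart rate
        self.target_elevation = target_elevation  # desired fraction above resting (0.2 = 20%)
        self.alpha = alpha                        # EMA weight: higher reacts faster
        self.max_step = max_step                  # largest intensity change per update
        self.smoothed_bpm = resting_bpm
        self.intensity = 0.5                      # 0.0 = calmest, 1.0 = most intense

    def update(self, bpm_sample: float) -> float:
        """Feed one heart-rate sample and return the new intensity level."""
        # Exponential moving average of the heart rate.
        self.smoothed_bpm += self.alpha * (bpm_sample - self.smoothed_bpm)
        # How far above resting the player currently is, as a fraction.
        elevation = (self.smoothed_bpm - self.resting_bpm) / self.resting_bpm
        # Above target: calm things down. Below target: intensify.
        error = self.target_elevation - elevation
        step = max(-self.max_step, min(self.max_step, error))
        self.intensity = max(0.0, min(1.0, self.intensity + step))
        return self.intensity
```

This version calms an agitated player. Computing `error` as `elevation - self.target_elevation` instead would give the amplifying behavior the paragraph also imagines, where the environment grows more aggressive as the pulse rises. The `max_step` bound is what keeps either behavior "subtle".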

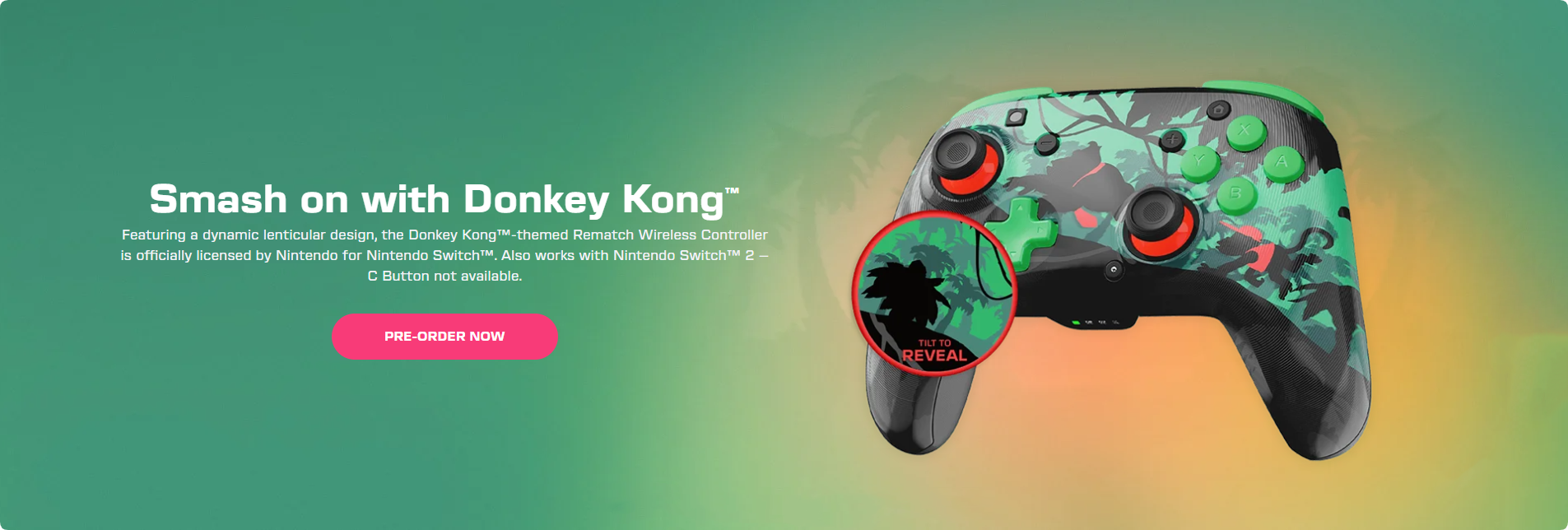

Beyond the handheld form itself, the boundary between the traditional controller and emerging interfaces is blurring. Immersive realities (both augmented and virtual) demand new forms of interaction that track hand movement, finger dexterity, and spatial position with very high precision. The lessons learned from building high-accuracy, low-latency tracking systems for these platforms are already influencing conventional controller design, most visibly in the integration of gyroscopes and accelerometers that allow intuitive, small-scale motion control to complement button inputs.
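A common way for motion control to complement a thumbstick is gyro-assisted aiming: the stick handles coarse turning, while small physical rotations of the controller add fine corrections. The sketch below assumes the gyroscope reports yaw rate in degrees per second and ignores very slow rotation as noise; `AimSettings`, `aim_delta`, and the default values are hypothetical, and only the gyroscope is used here (accelerometer data is typically fused in when a full orientation estimate is needed).

```python
from dataclasses import dataclass


@dataclass
class AimSettings:
    """Hypothetical tuning values for combined stick and gyro aiming."""
    stick_speed_dps: float = 180.0  # camera turn rate at full stick deflection, degrees/second
    gyro_sensitivity: float = 1.5   # camera degrees per degree of controller rotation
    gyro_deadband_dps: float = 1.0  # ignore slower rotation (sensor noise, hand tremor)


def aim_delta(stick_x: float, gyro_yaw_dps: float, dt: float, s: AimSettings) -> float:
    """Return the horizontal camera rotation (degrees) for one frame of length dt seconds.

    The stick contributes a turn rate; the gyroscope contributes rotation
    proportional to how far the player physically turned the controller.
    """
    stick_part = stick_x * s.stick_speed_dps * dt
    if abs(gyro_yaw_dps) < s.gyro_deadband_dps:
        gyro_part = 0.0  # treat tiny rotation rates as noise
    else:
        gyro_part = gyro_yaw_dps * s.gyro_sensitivity * dt
    return stick_part + gyro_part
```

A hard deadband is the simplest choice but causes a small jump at the threshold; a smoothed ramp near the threshold is a common refinement.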

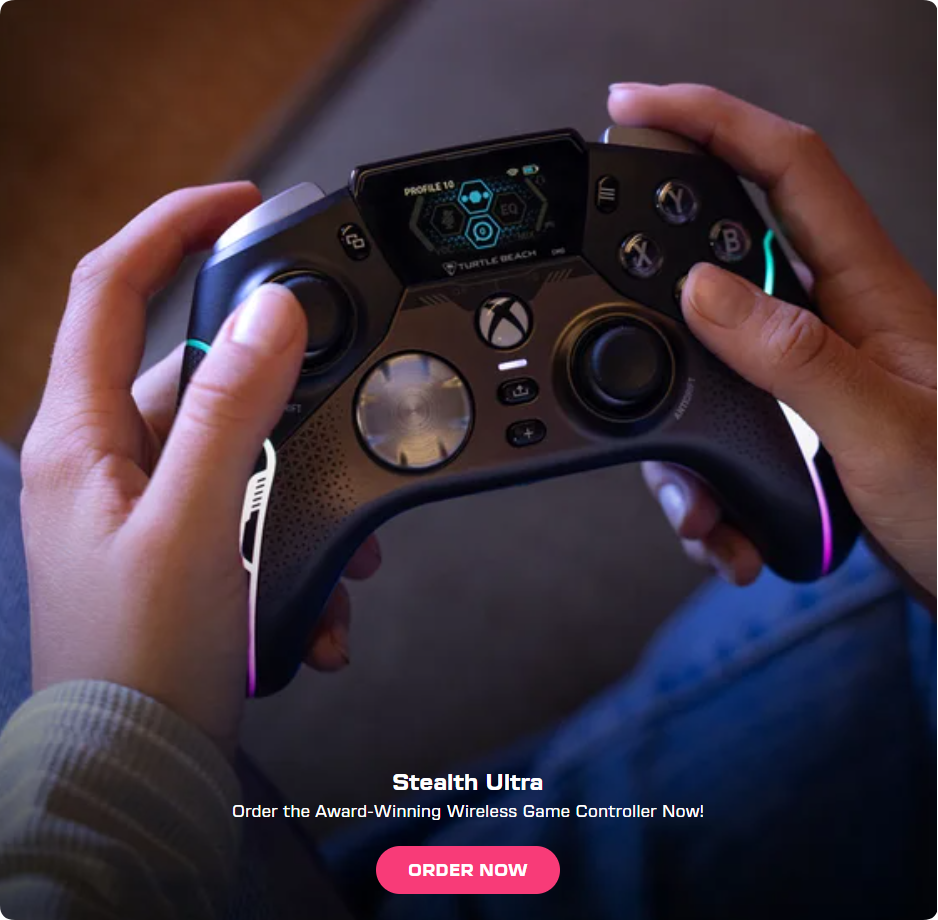

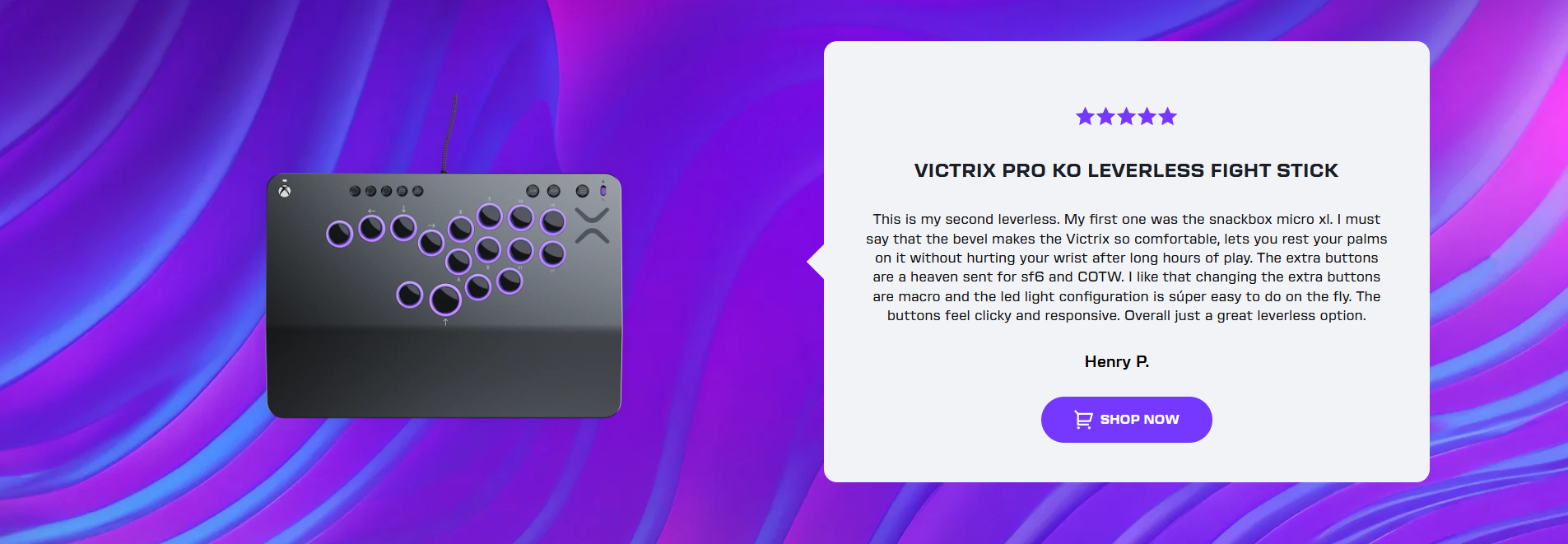

Finally, deep system-level customization will keep expanding. Devices are increasingly built with open, modular architectures. This includes not only the ability to swap physical components, but also sophisticated on-board macro programming, profile storage, and AI-enhanced calibration. Users may be able to run diagnostic routines that automatically tune dead zones, optimize latency, and even analyze their personal playstyle to suggest optimal sensitivity settings, bringing a high level of technical optimization to casual users.
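An automatic dead-zone routine could be as simple as recording the stick while it is untouched and sizing the dead zone to cover the largest resting deflection. The sketch below uses that plain statistical rule as a stand-in for the more sophisticated (possibly learned) calibration the paragraph anticipates; `tune_dead_zone`, `apply_radial_dead_zone`, and the margin, floor, and ceiling values are hypothetical. Axis values are assumed to be normalized to [-1.0, 1.0].

```python
import math
from typing import Iterable, Tuple


def tune_dead_zone(resting: Iterable[Tuple[float, float]], margin: float = 1.2,
                   floor: float = 0.02, ceiling: float = 0.3) -> float:
    """Suggest a radial dead zone from (x, y) samples recorded with the stick untouched.

    The result is the largest resting deflection seen, scaled by a safety
    margin and clamped between `floor` and `ceiling`.
    """
    peak = max((math.hypot(x, y) for x, y in resting), default=0.0)
    return min(ceiling, max(floor, peak * margin))


def apply_radial_dead_zone(x: float, y: float, dead_zone: float) -> Tuple[float, float]:
    """Zero out small deflections and rescale the rest so output still spans 0 to 1."""
    magnitude = math.hypot(x, y)
    if magnitude <= dead_zone:
        return 0.0, 0.0
    # Output ramps from 0 at the dead-zone edge to 1 at full deflection.
    scaled = min(1.0, (magnitude - dead_zone) / (1.0 - dead_zone))
    return x / magnitude * scaled, y / magnitude * scaled
```

Rescaling after the cut avoids a sudden jump from 0 to the dead-zone value. A suggested dead zone that hits the ceiling would be a sign of a worn or faulty stick, which a diagnostic routine could report rather than silently compensate for.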

In essence, the future of the interactive input device is not just about making the existing form factor better; it is about making the device smarter, more durable, and profoundly more communicative. It will transform from a passive input tool into an active, adaptive co-pilot in the digital experience, pushing the limits of what players can physically and emotionally feel as they interact with the virtual environment.